Quantization (16-bit, 8-bit, 4-bit) and QLoRA

Quantization is another technique to reduce the memory footprint of transformer models. In this article, we first discuss how real numbers are represented in computers as a binary sequence and the memory requirements of transformer models. Next, we describe quantization using 16-bit, 8-bit, and finally 4-bit using qLoRA.

Storage Types

The weight or computation parameters in a language model could be represented as the following different types:

- float32 (FP32): 4 bytes, full precision. 8 bits exponent, 23 bits mantissa.

- float16 (FP16): 2 bytes, half precision. 5 bits exponent, 10 bits mantissa.

- bfloat16 (BF16): 2 bytes, half precision. 8 bits for exponent, 7 bits for fraction.

- int8 (INT8): 8 bit, storing $2^8$ different values. [0, 255] or [-128, 127]

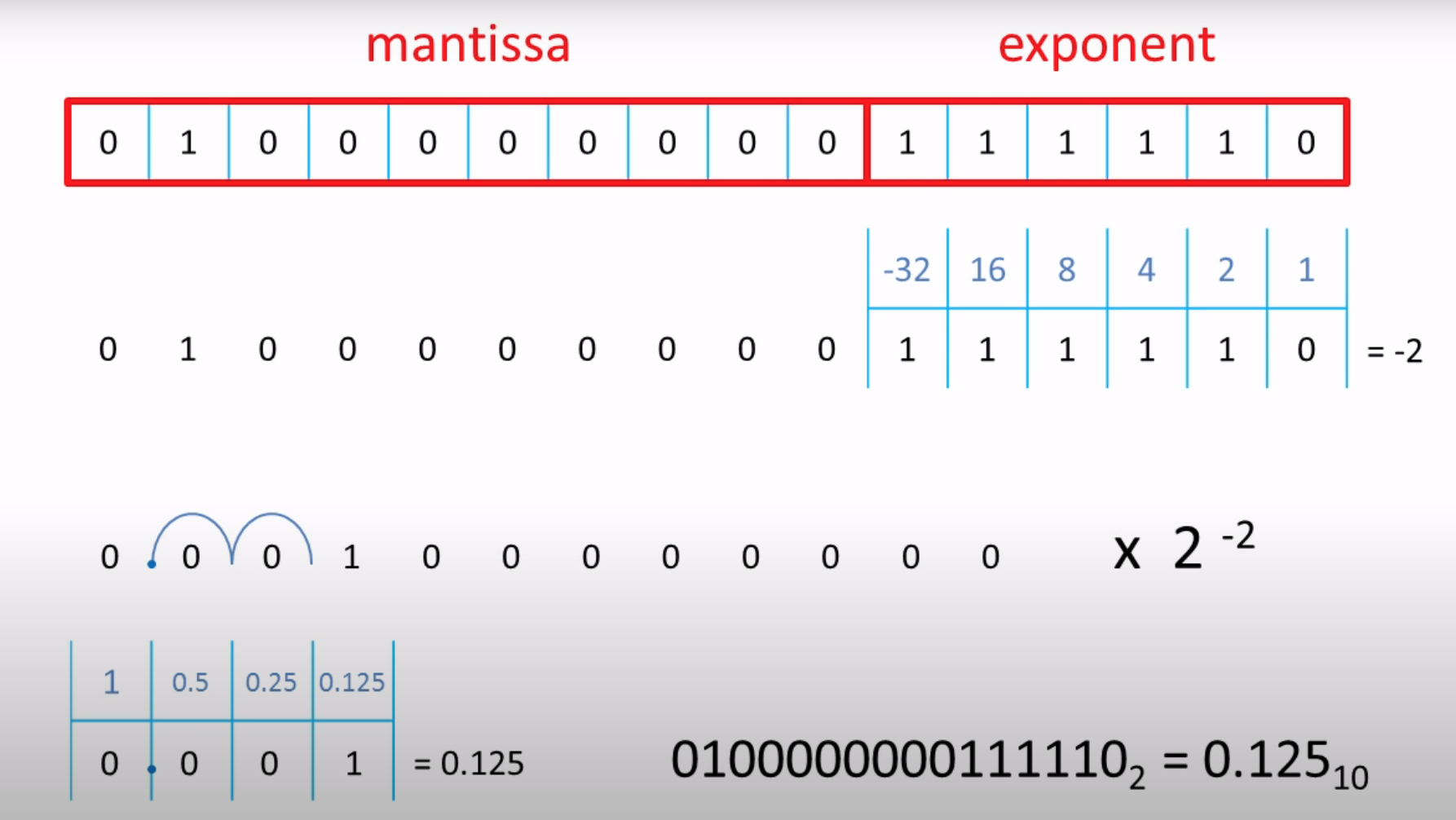

Mantissa and Exponent Interpretation for a Real Number

As an example of how to interpret a binary sequence of mantissa and exponent, to a real number, refer to the figure below. In the figure, we illustrate by representing the exponent using 6 bits and the mantissa using 10 bits:

- Positive/negative: The first bit of the mantissa determines whether the mantissa value is positive or negative. If the first (left-most) mantissa bit is 0, then the real number value is positive. If the first mantissa bit is 1, then the real number value is negative. The first (left-most) exponent bit similarly indicates whether the exponent is positive (bit-value “0”) or negative (bit-value “1”).

- To interpret the binary sequence in the figure, first calculate the exponent value: $-32 + 16 + 8 + 4 + 2 = -2$

- Then, imagine that there is an imaginary dot $\cdot$ on the right of the left-most mantissa bit. Since the exponent is $-2$, we shift this dot 2 steps to the left, resulting in the binary sequence “0 0 0 1” (as shown in the bottom left of the figure). This translates to a final result of 0.125

Memory Requirements of a Language Model

Given a model that has e.g. 7B weight parameters, how much memory does it consume during fine-tuning to represent its weights, gradients, and optimizer variables?

- Each weight parameter is usually represented by 32-bit float, hence requiring 4 bytes per parameter.

- Each parameter has an associated gradient using the same (e.g. 32-bit/4-byte) representation.

- Adam is usually used as a SOTA optimizer, which keeps track of two variables (momentum, variance), each requiring e.g. 32-bit float as well.

Hence, if we naively use 32-bit floats everywhere, the 7B model will require 7x4 (weights), 7x4 (gradient), and 7x4 (optimizer variables) = 84GB memory for fine-tuning.

Reducing Memory using Half-Precision and 8-bit Representation

Half-Precision

For training, we can represent the weights in FP32, while computations for the forward and backword pass are done in FP16/BF16 for faster training. Similarly, FP16/BF16 gradients are then used to update the FP32 weights.

For inference, researchers had found that half-precision (i.e. 16-bit) weights often provide similar quality as using FP32.

8-bit Quantization

For futher memory reduction, we can map floating point values into int8 (1 byte) values. An examplee of a common 8-bit quantization technique is the zero-point quantization:

- E.g. to map a FP value $x$ from [-1.0, 1.0] to the range of [-127, 127], do: $\text{int}(127x)$. E.g. given $x=0.3$, then $\text{int}(0.3*127)=38$

- To reverse: $38/127 = 0.2992$, so we have a quantization error of 0.008.

However, note that the FP16 values are not uniformly distributed across the entire input range. Instead, they are usually concentrated within a narrow range, with just a few outlier values. Taking advantage of this fact, researchers in the LLM.int8() paper “LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale” (August-2022) introduced a two-part quantization procedure, which they call LLM.int8(), to convert FP16 into int8 without performance degradation.

I.e. they show that it is possible to load a 16/32-bit LLM checkpoint, convert it into Int8, and then perform inference without any performance degradation vs performing inference in FP16. While this reduces inference memory consumption, it comes at a slight cost to inference speed. The researchers mentioned that w.r.t. the BLOOM-176B, generation with LLM.int8() is about 15% to 23% slower than performing inference using FP16.

Basic way to load a model in 8-bit:

model = AutoModelForCausalLM.from_pretrained("bigscience/bloom-3b", load_in_8bit=True, device_map="auto")

QLoRA for 4-bit Quantization

4-bit quantization for LoRA was introduced in the paper “QLoRA: Efficient Finetuning of Quantized LLMs” (May-2023) by researchers from Washington University. It introduces 3 innovations: (i) 4-bit NormalFloat (NF4), a new data type that is optimal for normally distributed weights (ii) double quantization to reduce the average memory footprint by quantizing the quantization constants, and (iii) paged optimziers which does automatic page-to-page transfers between the CPU and GPU when the GPU occasionally runs out-of-memory. The basic way to load a model in 4-bit is:

model = AutoModelForCausalLM.from_pretrained("bigscience/bloom-3b", load_in_4bit=True, device_map="auto")

Or, you can specify more config settings when loading a model in 4-bits like so:

from transformers import BitsAndBytesConfig

nf4_config = BitsAndBytesConfig(

load_in_4bit=True,

bnb_4bit_quant_type="nf4", # NF4 enables higher precision

bnb_4bit_use_double_quant=True, # save more memory

bnb_4bit_compute_dtype=torch.bfloat16 # 16-bit instead of 32-bit for faster training

)

model_nf4 = AutoModelForCausalLM.from_pretrained(model_id, quantization_config=nf4_config)

In the above configuration:

load_in4bit=Trueandbnb_4bit_quant_type="nf4": loads the pre-trained model parameter weights in 4-bit using NF4 quantization. These parameters are frozen and not fine-tuned.bnb_4bit_use_double_quant=True: uses a second quantization after the first one to save an additional 0.4 bits per parameterbnb_4bit_compute_dtype=torch.bfloat16: uses BF16 for faster training computations

During fine-tuning, the 4NF parameters are dequantized to 16BF to perform forward and backword computations. Hence, weights are only decompressed from 4NF to 16BF when needed, thus saving memory usage during training and inference. For fine-tuning of the LoRA parameter weights, the computations and gradients are still using 16-bits (16BF).

NOTE:

- It is not possible to perform 4-bit or 8-bit training. So if you are loading a model in 4-bit or 8-bit, then you must use an adapter such as LoRA to perform the fine-tuning.

- From the blog article https://huggingface.co/blog/4bit-transformers-bitsandbytes: “any GPU could be used to run the 4bit quantization as long as you have CUDA>=11.2 installed”

- Any model that supports accelerate loading (i.e. supports the

device_mapargument when callingfrom_pretrained) should be quantizable in 4bit. - The blog article https://huggingface.co/blog/4bit-transformers-bitsandbytes mentioned “we recommend users to not manually set a device once the model has been loaded with

device_map.”

qLoRA Memory Usage

If we leverage qLoRA to load an example 7B model in 4-bits, and then assuming qLoRA fine-tunes only about 0.2% of the total number of parameters (~14M parameters).

- Then we just need 3.5GB for the froze 7B parameters.

- Assuming the computation is done in 16-bits for the 14M parameters that we fine-tune for, this requires just 28MB for the gradient, and 14M * 2-bytes * 2-optimizer-variables (=56MB).

- So we need less than 4GB of ram to fine-tune a 7B model.