Mistral 7B

On October 10, 2023, researchers from the newly formed Mistral AI released a paper titled “Mistral 7B”, which describes a 7-billion-parameter language model.

- The model leverages grouped-query attention and sliding window attention for improved inference speed and reduced memory consumption.

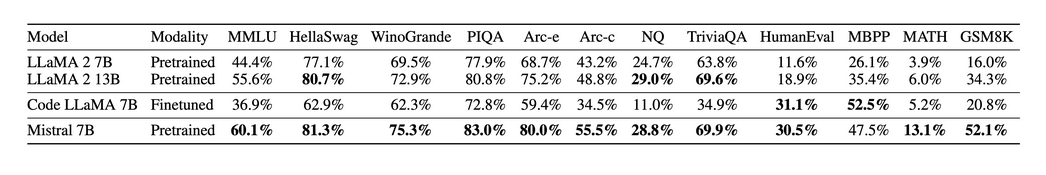
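To make the two attention mechanisms more concrete, here is a minimal sketch in plain Python. It is not the paper's implementation. The function names `sliding_window_mask` and `kv_head_for_query_head` are hypothetical, the sizes are toy values, and the window boundary convention (each token sees itself plus the previous `window - 1` tokens) is an assumption.

```python
def sliding_window_mask(seq_len, window):
    """Build a causal sliding-window attention mask.

    mask[i][j] is True when query position i may attend to key position j.
    Assumption: a token sees itself and the previous (window - 1) tokens.
    """
    return [
        [(j <= i) and (i - j < window) for j in range(seq_len)]
        for i in range(seq_len)
    ]


def kv_head_for_query_head(query_head, n_heads, n_kv_heads):
    """Map a query head to the key/value head it shares under grouped-query attention."""
    if n_heads % n_kv_heads != 0:
        raise ValueError("n_heads must be divisible by n_kv_heads")
    group_size = n_heads // n_kv_heads  # query heads per shared key/value head
    return query_head // group_size


if __name__ == "__main__":
    # Toy sizes chosen for readability, not taken from the paper.
    for row in sliding_window_mask(seq_len=6, window=3):
        print("".join("x" if allowed else "." for allowed in row))
    print([kv_head_for_query_head(h, n_heads=8, n_kv_heads=2) for h in range(8)])
```

Each row of the mask has at most `window` allowed positions, so the keys and values a token needs stay bounded no matter how long the sequence grows. In the grouped-query mapping, several query heads read from the same key/value head, so fewer key/value tensors have to be cached during decoding.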

- The authors evaluated the model on a wide variety of tasks, grouped into commonsense reasoning, world knowledge, reading comprehension, math, code, and popular aggregated benchmarks.

- The following figure from the paper shows that Mistral 7B outperforms Llama-2-7B and Llama-2-13B on these benchmarks.

Unfortunately, the paper provides no details on the datasets used to pretrain the Mistral model.

Written on December 3, 2023